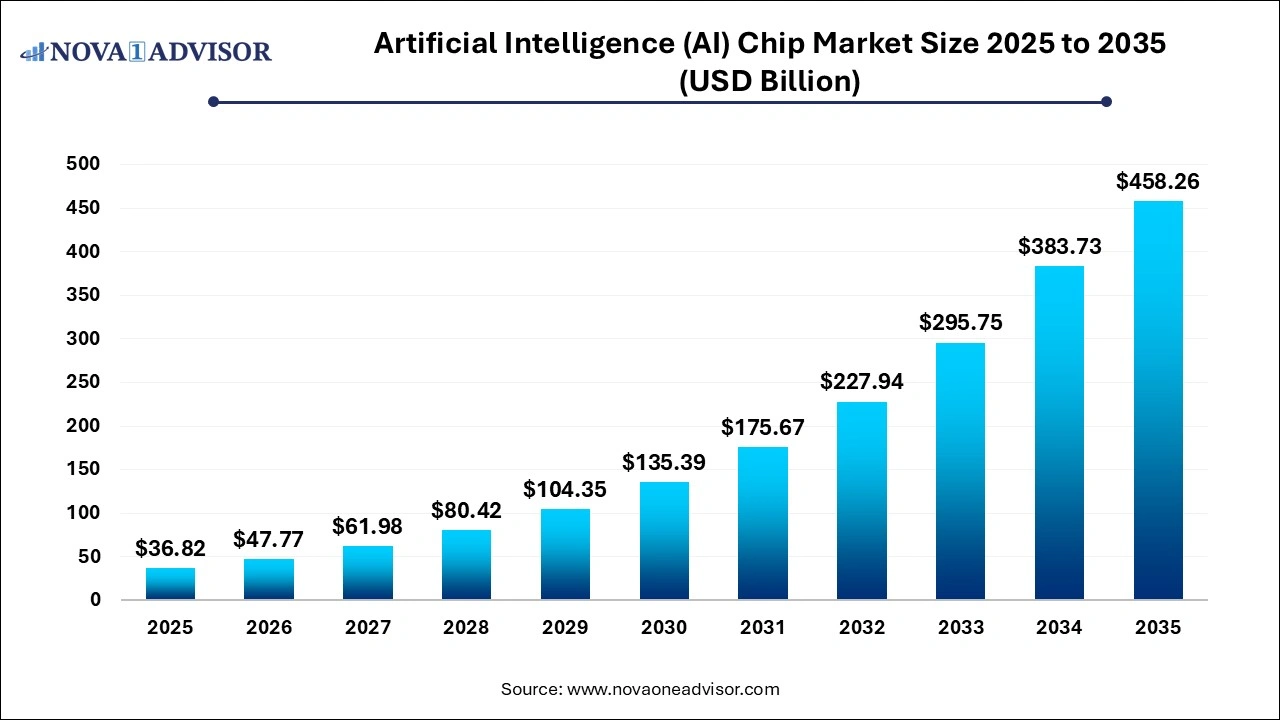

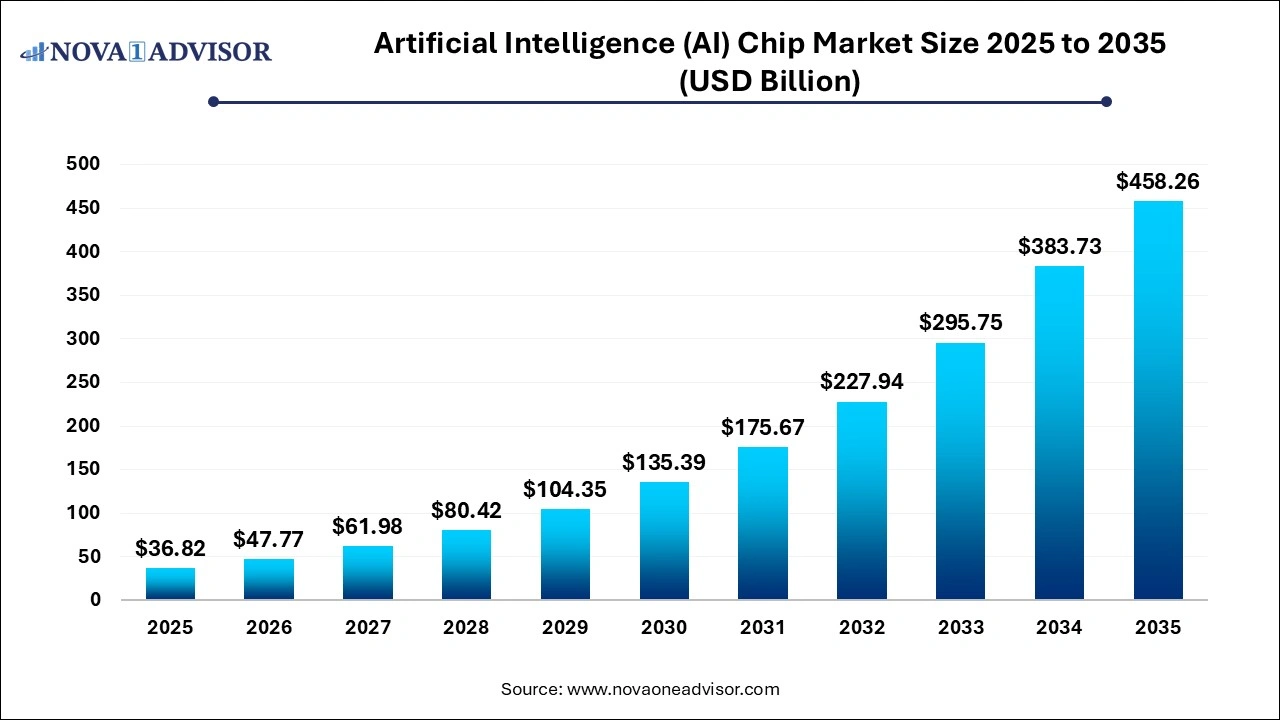

Artificial Intelligence (AI) Chip Market Size, Growth and Trends 2026 to 2035

The global artificial intelligence (AI) chip market was valued at USD 36.82 billion in 2025 and is projected to reach around USD 458.26 billion by 2035, growing at a CAGR of 29.73% over the forecast period from 2026 to 2035.
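As a rough illustration of how a compound annual growth rate (CAGR) relates a starting market size to a later one, the sketch below implements the standard compounding formula. The function names `cagr` and `project` are hypothetical helpers introduced here for illustration; they are not part of the report's methodology. Published CAGR figures depend on the base year and rounding conventions the publisher uses, so a recomputation from endpoint values may not reproduce a reported rate exactly.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Compound start_value forward by `rate` (a fraction) for `years` years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    # One year of growth at the stated 29.73% rate from the 2025 base value.
    print(round(project(36.82, 0.2973, 1), 2))  # 47.77 (USD billion)

    # Rate implied by two endpoint values over a chosen number of years.
    print(f"{cagr(100.0, 121.0, 2):.2%}")  # 10.00%
```

Applying the stated rate to the 2025 base value for one year gives roughly USD 47.77 billion, which is the 2026 market size listed in the report scope.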

Key Pointers:

- By geography, the North America region dominates the global market.

- By technology, the machine learning segment dominates the global market.

- By chip type, the CPU segment leads the global market.

- By processing type, the edge segment accounted for more than 75% of revenue share in 2022.

- By function, the inference segment is growing at a significant rate.

- By end-users, the BFSI segment holds the maximum revenue share and is predicted to maintain growth from 2023 to 2032.

Artificial Intelligence (AI) Chip Market Report Scope

| Report Coverage | Details |
| --- | --- |
| Market Size in 2026 | USD 47.77 Billion |
| Market Size by 2035 | USD 458.26 Billion |
| Growth Rate From 2026 to 2035 | CAGR of 29.73% |
| Base Year | 2025 |
| Forecast Period | 2026 to 2035 |
| Segments Covered | By Technology, By Chip Type, By Processing Type, By Function, By End-Users |
| Market Analysis (Terms Used) | Value (USD Million/Billion) or (Volume/Units) |
| Regional Scope | North America; Europe; Asia Pacific; Latin America; MEA |
| Key Companies Profiled | NVIDIA Corporation, General Vision Inc., Amazon Web Services, Google Inc., Microsoft Corporation, Advanced Micro Devices Inc. |

Artificial Intelligence (AI) Chip Market Dynamics

Driver: The emerging trend of autonomous vehicles

Autonomous vehicles rely on a combination of sensors, cameras, radar, lidar, and other technologies to perceive their surroundings accurately. AI chipsets play a crucial role in processing the vast amount of real-time data generated by these sensors. The chipsets accelerate perception tasks such as object detection, tracking, and classification, allowing the vehicle to make informed decisions based on the analyzed data. Powerful AI chipsets capable of handling complex perception tasks are essential to enable safe and efficient autonomous driving.

Autonomous vehicles employ sophisticated AI algorithms for mapping, path planning, and decision-making tasks. These algorithms require substantial computational power and efficient processing to handle the complexity and real-time nature of driving. AI chipsets are designed to deliver the high-performance computing needed to execute these complex algorithms efficiently, ensuring the smooth operation of autonomous vehicles.

Restraint: Lack of AI hardware experts and skilled workforce

Developing AI chipsets requires specialized knowledge and expertise in hardware design, architecture, and optimization for AI workloads. However, there is a shortage of AI hardware experts who possess the necessary skills and experience to design and develop these chipsets. This scarcity of expertise can slow the pace of innovation and product development in the AI chipset market.

AI chipsets often incorporate specialized accelerators and custom architectures tailored for AI workloads. Designing and optimizing these components requires technical skills and knowledge that may be limited in the existing talent pool. The shortage of skilled workers who can handle these specialized tasks can restrict the growth and development of AI chipsets.

Opportunity: Surging demand for AI-based FPGA

FPGAs offer inherent flexibility and programmability compared to fixed-function ASICs (Application-Specific Integrated Circuits). This makes them suitable for handling diverse AI workloads and adapting to evolving AI algorithms. As AI models and algorithms continue to evolve rapidly, the ability to reprogram and reconfigure FPGAs provides a competitive advantage in meeting the changing demands of AI applications.

Energy efficiency is critical in AI chipsets, particularly in edge computing and IoT devices where power constraints exist. FPGAs can be power-optimized to deliver high performance per watt by leveraging parallel processing capabilities and fine-grained control over resources. The ability to optimize power consumption while maintaining performance is crucial for AI chipsets, making AI-based FPGAs an attractive choice.

Challenge: Data privacy concerns in AI platforms

AI platforms often require access to large datasets, including personal and sensitive information. This raises concerns about data security and protection. If the data used for training AI models is not adequately safeguarded, it can be vulnerable to unauthorized access, breaches, or misuse. This can lead to privacy violations, identity theft, or other forms of data abuse.

AI platforms often involve the sharing of data across organizations or even international borders. However, data privacy regulations can vary across jurisdictions, making it challenging to ensure compliance and protect user privacy. Adhering to diverse legal frameworks while enabling data sharing and collaboration poses a significant challenge for AI chipset companies.

Artificial Intelligence (AI) Chip Market Segment Insights

By Technology Insights

How did the machine learning segment dominate in the artificial intelligence (AI) chip market?

The machine learning segment is driven by the massive computational requirements of large language models and predictive analytics. The segment’s dominance is reinforced by the shift towards application-specific integrated circuits and high-bandwidth memory, which provide the necessary power efficiency and throughput for complex neural network workloads. As global enterprises scale their cloud infrastructure and AI server capacity, the demand for specialized ML-optimized hardware continues to outpace general-purpose silicon.

The generative AI segment is driven by the shifting demand from general-purpose silicon toward high-efficiency AI accelerators and ASICs. This transition is fueled by the dual requirement for massive training power and low-latency real-time inference, particularly as hyperscalers and edge-device manufacturers scale their infrastructure. The integration of GenAI into enterprise workflows and autonomous automotive systems is driving specific verticals, transforming hardware from a capital expense into a core strategic asset.

By Chip Type Insights

How did the graphics processing units segment account for the largest share in the artificial intelligence (AI) chip market?

The graphics processing units segment is driven by their parallel processing architecture, which is uniquely optimized for the high-volume matrix calculations essential to deep learning. This hardware advantage is fortified by a mature CUDA software ecosystem, creating a standard for developers that acts as a significant competitive moat. Furthermore, the integration of tensor cores and high-bandwidth memory (HBM3e) ensures that GPUs remain the primary workhorse for both training massive parameters and delivering low-latency inference.

The application-specific integrated circuits segment is driven by a shift spearheaded by cloud hyperscalers transitioning to proprietary hardware, such as Google's TPUs, to optimize operational costs and manage data-intensive workloads. Furthermore, the integration of ASICs into consumer electronics and automotive systems is accelerated by breakthroughs in 3D packaging and semiconductor nanowire technology.

By Processing Type Insights

How did the Edge AI segment account for the largest share in the artificial intelligence (AI) chip market?

The edge AI segment is driven by localized data processing. These chips eliminate cloud latency and address critical data privacy requirements, making them indispensable for autonomous vehicles, healthcare, and IoT devices. The market is increasingly pivoting toward neural processing units (NPUs) and specialized ASICs that deliver high performance-per-watt for battery-constrained environments. Ultimately, the synergy between 5G infrastructure and on-device intelligence is establishing Edge AI as the essential standard for real-time, secure, and energy-efficient digital ecosystems.

By Function Insights

How did the inference segment account for the largest share in the artificial intelligence (AI) chip market?

The inference segment is driven by the transition from model training to large-scale production deployment, where real-time, low-latency processing is essential for generative AI, autonomous systems, and healthcare diagnostics. The market is increasingly diversifying toward power-efficient NPUs and custom ASICs, such as Amazon's Inferentia, to manage the high-volume recurring costs of "on-demand" intelligence.

By End User Insights

How did the BFSI segment account for the largest share in the artificial intelligence (AI) chip market?

The BFSI segment is driven by the critical transition from reactive to real-time, proactive security and fraud detection. With machine learning now accounting for much of the sector's AI adoption, financial institutions are deploying specialized hardware to manage hyper-personalized customer experiences, high-frequency algorithmic trading, and automated credit underwriting. The current surge into generative AI for complex compliance and report automation is further accelerating the demand for high-performance cloud-based infrastructure.

The healthcare segment is driven by the integration of high-performance ASICs and Edge AI into robotic surgery systems and real-time diagnostic devices, which is setting new benchmarks for procedural precision and administrative efficiency. Consequently, the sector's rapid transition toward personalized, data-driven medicine continues to attract record R&D investment, positioning healthcare as a primary driver of long-term demand for specialized AI silicon.

Some of the prominent players in the Artificial Intelligence (AI) Chip Market include:

Segments Covered in the Report

This report forecasts revenue growth at global, regional, and country levels and provides an analysis of the latest industry trends in each of the sub-segments from 2026 to 2035. For this study, Nova One Advisor, Inc. has segmented the global Artificial Intelligence (AI) Chip market by technology, chip type, processing type, function, end user, and region.

By Technology

- Machine learning

- Natural language processing

- Context-aware computing

- Computer vision

- Predictive analysis

By Chip Type

By Processing Type

By Function

By End-Users

- Manufacturing

- Healthcare

- Automotive

- Agriculture

- Retail

- Human Resources

- Marketing

- BFSI

- Government

- Others

By Region

- North America

- Europe

- Asia-Pacific

- Latin America

- Middle East & Africa (MEA)